NEH-supported digital humanities projects pave the way for comprehensive computational analysis of nearly 17 million texts in the HathiTrust Digital Library

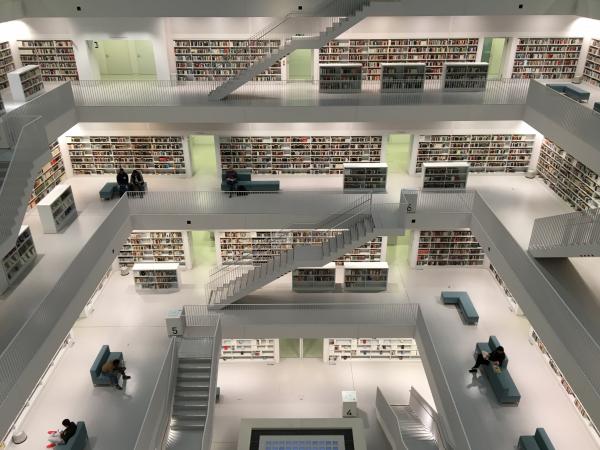

Unsplash/ Tobias Fischer

This fall, HathiTrust, the massive digital library maintained through a global partnership of more than 140 major academic institutions, released a set of text- and data-mining tools that enable computational analysis of its millions of digitized titles. For the repository and the scholars who depend on it for their research, the new tools mark a major breakthrough.

Previously, these computational tools were available only for the portion of the HathiTrust collection in the public domain—hampering research on approximately 60 percent of the repository and limiting large-scale analysis of U.S. publications to texts published before 1923.

Now, however, building on tools developed through several NEH-supported projects, HathiTrust can give researchers computational access to its entire collection of 16.7 million volumes, including modern works still under copyright, for data mining and computational analysis. These platforms will open up new avenues for research in the humanities, the social sciences, and other fields.

Designed to adhere to U.S. copyright law, the new HathiTrust Research Center analytic tools allow for “non-consumptive research” on the entire HathiTrust corpus. This means that users can apply data mining, visualization, and other computational techniques to titles without being able to download the content of individual copyrighted books.

As a result, users can now search the entire HathiTrust corpus, including more than 10 million titles from the 20th and 21st centuries that are still under copyright, says Mike Furlough, executive director of HathiTrust. “We think it’s one of the most significant applications of fair use to support research in a long while. We’re also extremely grateful for support from the National Endowment for the Humanities that have allowed us to develop these services,” he says.

Three NEH-funded research projects in particular played significant roles in advancing the HathiTrust platform:

- Exploring the Billions and Billions of Words in the HathiTrust Corpus with Bookworm (HathiTrust + Bookworm Project): Led by J. Stephen Downie (University of Illinois and the HathiTrust Research Center), Erez Lieberman-Aiden (Baylor College of Medicine), and Ben Schmidt (Northeastern University), the NEH-funded HathiTrust + Bookworm project developed open-source tools for viewing word-usage trends across the entire HathiTrust corpus. Users can then “drill down” on a given word to read the relevant passages of the underlying texts. (For example, a Bookworm search for “polka” shows a peak in the word’s use in magazines and other publications around 1854, coinciding with the wave of “polkamania” that swept Europe and the United States.) The Bookworm project, says Furlough, “helped develop the workflow and rationale behind HTRC’s Extracted Features Dataset, a key data resource for researchers, and was the first analytical tool extended to the full HathiTrust corpus.” Watch a 2014 “lightning round” explanation of the HathiTrust + Bookworm project.

- Textual Geographies: The NEH-funded Textual Geographies project lets users mine HathiTrust texts for references to specific locations and create maps and other visualizations of the resulting geographic data. The project relies on natural language processing and geocoding techniques to associate spatial data with place references across a corpus of more than 16 million items. This allows, for example, scholars of American literature to track how the western United States was portrayed in nineteenth-century fiction, or historians of science to map which natural environments attracted scientific interest over time by analyzing the locations mentioned in geology, biology, or ecology textbooks. “The access HTRC provides to in-copyright materials is especially valuable,” says Matthew Wilkens of the University of Notre Dame, who directs the Textual Geographies project, “because it allows us and our users to explore research questions across centuries of printed history down to the present day. Giving the same access to all users will accelerate the pace of discovery, democratize large-scale research, and foster digital humanities work that engages the twentieth- and twenty-first centuries. I can’t wait to see what people do with this unbelievable resource.” Watch a 2016 “lightning round” explanation of the Textual Geographies project.

- Text in Situ: Reasoning About Visual Information in the Computational Analysis of Books: Led by Taylor Berg-Kirkpatrick (Carnegie Mellon University) and David Bamman (University of California, Berkeley), the NEH-funded Text in Situ project enables computational analysis of the visual information in printed books. Using Shakespeare’s First Folio at the Folger Shakespeare Library as a test case, researchers analyzed the physical layout of digitized texts to identify visual cues in typesetting and document structure. This makes it possible to incorporate the visual context of books (such as typography, marginalia, chapter structure, or physical damage to a page) into large-scale processing of machine-read text. Extending these tools will enable, for example, studies of how authors and editors have historically divided up important concepts in indexes, or of changing patterns in advertisements. Watch a 2018 “lightning round” explanation of the Text in Situ project.